Introduction

In late 2024, AWS quietly introduced major quota cuts for Amazon Bedrock.

These changes weren’t well-communicated and only became evident when users encountered issues.

My experience is primarily with Anthropic’s Claude 3.5 Sonnet in the Frankfurt region (eu-central-1), but other foundation models and regions have also been affected.

In this post, I’ll break down what happened, the challenges it has caused for my AWS partners and me, and what users can do to navigate these limitations.

I also offer my thoughts on how AWS could improve communication around such high-impact changes in the future.

The topic is still highly relevant: the very low Amazon Bedrock quotas remain in place for all new AWS accounts, so this post describes the status quo.

The problem

Quota cuts disrupt AWS partner’s client demo

In early November, an AWS Partner reached out to me for guidance:

The product is a web-based interface designed for enterprise clients; imagine it as a ChatGPT enhanced with features useful to enterprises and meeting typical large corporate requirements (like user management, data security, etc.).

The GenAI service is built on Bedrock, typically using Claude 3.5. The idea is that each future client would run the service on their own account, so it wouldn’t be available in a SaaS model.

They built a test system that worked perfectly, but at the first live client demo (a week ago), it started throwing errors, with the system indicating that there were too many requests.

They investigated and found the account limits had been reduced to:

- 1 query per minute (down from 20)

- 4,000 tokens per minute (down from 300,000)

Initially, they thought this was a bug, but based on responses from support, it seems to be intentional on AWS’s part.

They have tried multiple EU regions (Frankfurt, Dublin, etc.).

Provisioned throughput is too expensive…

Quota issues stall project for German AWS partner

About two weeks later, a large AWS Advanced Partner contacted me with a similar problem:

There is an issue with Bedrock on one of our AWS accounts: token usage on the models was suddenly blocked.

Unfortunately, we are stuck in one of our projects.

I saw you listed as our contact person in the open cases. Do you know the reason and how to solve it?

They came up with a couple of questions, but I don’t know how to respond or commit if there is a cost impact as well.

They had already opened an AWS support ticket, and the response from the AWS support engineer was:

Hello, We would need to collaborate with our service team for a limit increase of this size and before they would be able to assist, we would need you to answer the below questions:

- Model ID (share model ID from this list)

- Commitment Terms

- MUs to be purchased

- ETA for customer to take ownership of the model units once we provision them to their account

- Please make sure to take ownership of the implemented capacity within 7 days from the date of implementation. Capacity will be returned to service account and reallocated if they are not purchased within prior mentioned period.

It seems that both cases are similar, so I decided to look into it!

Verification of the problem in my environment

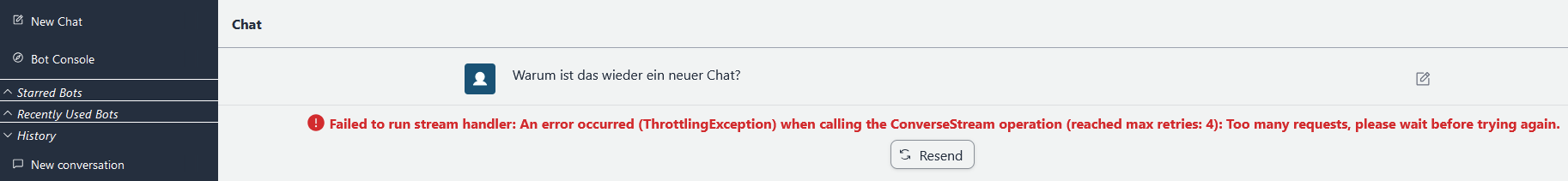

I tested this issue in my AWS sandbox account using my Bedrock Claude Chat app. Unfortunately, the chatbot had stopped working and was throwing these errors:

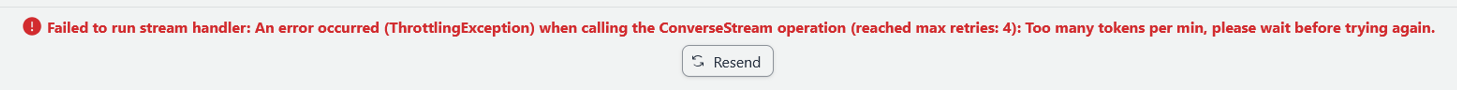

A few weeks ago, when it was first set up, everything worked fine. However, the screenshots show the significant quota cuts:

The quota for On-demand InvokeModel requests per minute was suddenly reduced to 1 (from a default of 20), and the tokens per minute quota was reduced to 2,000 (from a default of 200,000). These reductions make it impossible to run a chatbot effectively, even just for internal use or demos.
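A quick back-of-the-envelope calculation shows why these numbers rule out even a demo chatbot. This Python sketch makes a simplifying assumption: each request counts its input plus output tokens against the tokens-per-minute quota, and no other accounting rules apply. The 3,000-token turn size (system prompt, chat history and answer) is an illustrative assumption, not a measured value.

```python
def requests_per_minute(rpm_quota: int, tpm_quota: int, tokens_per_request: int) -> int:
    """Requests that fit into one minute: the tighter of the two quotas wins.

    Simplifying assumption: each request consumes its input + output tokens
    from the tokens-per-minute budget; real Bedrock accounting may differ.
    """
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return min(rpm_quota, tpm_quota // tokens_per_request)


def reduction_percent(old: float, new: float) -> float:
    """Size of a quota cut in percent, rounded to one decimal place."""
    return round(100 * (1 - new / old), 1)


# Illustrative turn size (assumption): system prompt + chat history + answer.
TOKENS_PER_TURN = 3_000

reduced = requests_per_minute(1, 2_000, TOKENS_PER_TURN)  # 0: one turn exceeds the token budget
with_defaults = requests_per_minute(20, 200_000, TOKENS_PER_TURN)  # 20: the request quota is the limit
```

Under this simplified model, the reduced quotas cannot serve even one such turn per minute, while the defaults allow 20. In my account, the cuts work out to 95% for requests and 99% for tokens per minute.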

Normally, AWS quotas are adjustable, but as shown in the screenshot and confirmed in this documentation, these specific quotas cannot be changed. I also verified the same limits on my private AWS account, which has been active for 13 years with consistent usage.
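You can also check whether your own account is affected programmatically instead of clicking through the console. The Python sketch below compares the applied Bedrock quotas with the AWS defaults through the Service Quotas API in boto3 (`list_service_quotas` and `list_aws_default_service_quotas`). It assumes `bedrock` is the Service Quotas service code. The name filter matches the quota names shown in the console, which may differ per model and region. The API only returns applied values for quotas that have one, so the sketch skips quotas missing from the applied list.

```python
from typing import Dict, Iterable, List, Tuple


def find_reduced_quotas(
    applied: Iterable[dict], defaults: Iterable[dict], name_filter: str = ""
) -> List[Tuple[str, float, float, bool]]:
    """Return (QuotaName, applied value, default value, Adjustable) for quotas below default.

    Both inputs use the quota dict shape returned by the Service Quotas API
    (QuotaCode, QuotaName, Value, Adjustable, ...).
    """
    default_by_code: Dict[str, dict] = {q["QuotaCode"]: q for q in defaults}
    reduced = []
    for quota in applied:
        default = default_by_code.get(quota["QuotaCode"])
        if default is None or name_filter not in quota["QuotaName"]:
            continue
        if quota["Value"] < default["Value"]:
            reduced.append(
                (quota["QuotaName"], quota["Value"], default["Value"], quota.get("Adjustable", False))
            )
    return reduced


def fetch_bedrock_quotas(region: str) -> Tuple[List[dict], List[dict]]:
    """Fetch applied and default Bedrock quotas for one region (needs AWS credentials)."""
    import boto3  # imported lazily so find_reduced_quotas works without the SDK

    client = boto3.client("service-quotas", region_name=region)

    def collect(operation: str) -> List[dict]:
        pages = client.get_paginator(operation).paginate(ServiceCode="bedrock")
        return [quota for page in pages for quota in page["Quotas"]]

    return collect("list_service_quotas"), collect("list_aws_default_service_quotas")


# Usage:
#   applied, defaults = fetch_bedrock_quotas("eu-central-1")
#   for name, value, default, adjustable in find_reduced_quotas(
#       applied, defaults, "Claude 3.5 Sonnet"
#   ):
#       print(f"{name}: {value:g} (default {default:g}), adjustable={adjustable}")
```

The same check is useful after a support case is resolved, to confirm that the approved values have actually been applied to the account.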

What is going on?

Balancing AI growth and AWS infrastructure limitations

AWS’s recent quota cuts appear to be a direct response to the rising demand for Generative AI services, especially in high-traffic regions like Frankfurt. As more businesses integrate AI into their applications, the popularity of advanced models like Anthropic’s Claude 3.5 Sonnet has soared, putting pressure on AWS’s infrastructure. This creates a tough challenge. While AWS continues to promote Amazon Bedrock as a key platform for AI development, the strict quota limits make it hard for developers to properly test or showcase their solutions. These restrictions have led to growing frustration, particularly among AWS partners and distributors, where proof-of-concepts and customer demos are essential for success.

But what happened?

AWS email notification

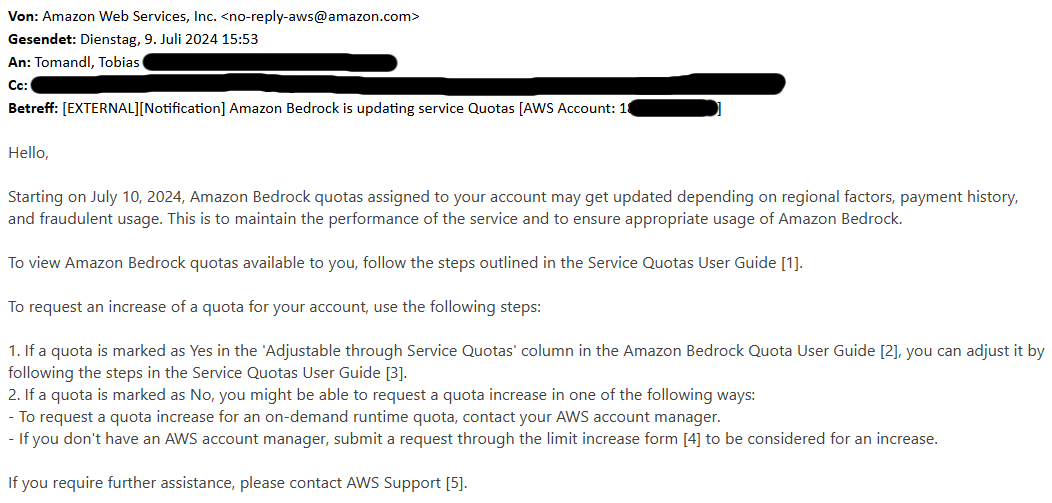

I found an old email dated 9 July 2024 in my Outlook archive:

Communication issues

AWS announced these changes on 9 July 2024, but the details weren’t clearly communicated in my opinion.

- Recipients: The message only reached the root email address of the AWS account, which is often inaccessible to technical audiences. AWS is not responsible for this problem. See below for my advice to AWS users.

- Long implementation period: The email stated that the changes would take effect one day after the announcement, but in Frankfurt they were only implemented four months later. Many users who received the email had forgotten about it by then.

- Missed recipients: I assume that users who signed up for Bedrock after 9 July 2024 did not receive this email, so this group (covering a four-month window) was unaware of the upcoming changes. The same applies to all new AWS accounts (for example, one per demo or customer) opened after 9 July 2024.

- Lack of clarity on account scoring: The email mentioned payment history and fraudulent usage, which confused users who had no issues in either area.

Lack of clarity on account scoring

One of our partners investigated this further with AWS support and discovered that the quota changes were influenced by an internal AWS account scoring system (referred to as the C-score). Only a handful of blog posts discuss this topic, such as this one. This score, which has not been publicly documented or officially confirmed by AWS, reportedly takes into account:

- Payment history

- Fraudulent activity

- Monthly recurring revenue (MRR)

- The age and activity of the AWS account

Newly created AWS accounts, such as member accounts created through AWS Organizations, typically start with a low score, which may explain the stricter quotas. The same is true for sandbox and developer accounts, which typically do not drive much consumption. However, none of this information was included in the communication, leaving customers in the dark about how their quotas were determined or how they could improve them.

Follow the suggested AWS way

Contact AWS Account Manager

I estimate that 70% of AWS accounts receiving this email don’t have an AWS Account Manager (AM) or don’t know who that person might be. Furthermore, AWS AMs are customer-facing only. What about AWS partners? I assume that at least 70% of AI development is handled by these specialists. Why is there no mention of their Partner Development Manager (PDM)?

Open an AWS Support Case

In the email, AWS recommends opening a support case. From my point of view, this is not logical: quotas are typically managed in the Service Quotas console of the AWS account itself. And if a quota is clearly marked “Not adjustable” in the documentation (see screenshot above), why can it be adjusted via AWS Support? This is not straightforward and confuses AWS users.

Reserved capacity recommendations

All the scenarios I have described involve only customer or internal demos and proofs of concept. Even so, after a support ticket was opened, AWS Support recommended switching the model inference type from On-Demand to Provisioned Throughput. Unfortunately, Provisioned Throughput is very expensive and not suitable for these use cases.

Support case with basic support

For newly provisioned non-production or sandbox accounts (used for development or demos), AWS support plans are often not purchased, leaving only Basic Support. Basic Support still lets you open cases for account, billing and service quota requests, but many users are unaware of this option. Both partners I worked with were unaware of it. They felt stuck, thinking they couldn’t get support, and contacted their AWS Distributor, Ingram Micro, for help.

Proposed way forward

The following are my personal recommendations based on my experience and knowledge.

Driving consumption

Assuming the C-score works as described above, increasing consumption may improve your quota limits. Launching a low-cost EC2 instance (e.g., t3.micro), ideally outside the Free Tier, for a few days can help.

Avoid using new accounts

New AWS accounts often have low quotas. Whenever possible, use established accounts for demos or internal use to avoid drastic quota cuts. Development is often done by an AWS partner. Deploying these demos to new, customer-owned AWS accounts is exactly what causes these problems. Try to develop directly in the customer accounts or deploy the entire demo from the AWS partner’s AWS account, but don’t switch. If you need to switch, drive consumption first.

Rethink the AWS region you need

Demand is higher in some AWS regions, and Frankfurt in particular seems to still have low hardware capacity, so the cuts are greatest there.

If this is for development, internal use, demos, or even a proof of concept, consider moving to the US East (N. Virginia) region (us-east-1).

The quota cuts there were smaller; in fact, my AWS accounts had no cuts at all.

Open a support ticket for quota increase

Even with just Basic Support, you can open a ticket for Bedrock quota adjustments. Important: consider the three points above first! In the section Account and billing -> Account -> Other Account Issues -> General question, I used the following:

Subject: Bedrock quota for AWS member-account 123456789012

…

This account is crucial for a customer demo of an AI chatbot utilizing Claude 3.5 Sonnet in Frankfurt.

The chatbot is functional but repeatedly encounters application errors such as: “Failed to run stream handler: An error occurred (ThrottlingException) when calling the ConverseStream operation (reached max retries: 4): Too many tokens per minute, please wait before trying again.” Upon reviewing the quotas, I discovered that my account’s quotas are significantly lower than the AWS default values:

- InvokeModel requests per minute: 1 (my account) vs. 20 (AWS default)

- Tokens per minute: 2,000 (my account) vs. 200,000 (AWS default)

With these constraints (2,000 tokens per minute and only 1 request per minute), I am unable to demonstrate the chatbot. My AWS organization drives significant AWS consumption, and my sandbox account has a long-standing history with no issues such as unpaid invoices or fraudulent activity. As such, I kindly request that the quotas for my account be adjusted to align with the AWS default values:

- On-demand InvokeModel requests per minute for Anthropic Claude 3.5 Sonnet: 20

- On-demand InvokeModel tokens per minute for Anthropic Claude 3.5 Sonnet: 200000

- Region: eu-central-1

This adjustment will allow me to conduct the customer demo smoothly. Thank you in advance for your assistance, and please let me know if further information is required.

Two days later, I had a reply:

… For a limit increase of this type, I will need to collaborate with our Service Team to get approval.

Please note that it can take some time for the Service Team to review your request. I will hold on to your case while they investigate and will update you as soon as they respond…

I’m sharing this detail because the service team will check your account’s C-score, and if it’s still low, they won’t approve it!

Some additional days later, I got this:

… Thank you for your patience while we were working with the internal team.

We have now received an update, the team approved your quota increase request as per the below specs:

123456789012 with limit-name: max-invoke-tokens-on-demand-claude-3-5-sonnet-20240620-v1 is 200000.0.

123456789012 with limit-name: max-invoke-rpm-on-demand-claude-3-5-sonnet-20240620-v1 is 20.0.

Please feel free to reply back on the case if you need any assistance and we will be more than happy to help…

After around two weeks, my AWS account had its default quotas restored. 😆 I never thought I would be happy with the defaults. 🤣 With 20 requests per minute and 200,000 tokens per minute, the chatbot is back in use for non-production, internal team purposes, and our two AWS partners have also been unblocked.
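While you wait for a quota increase, and even afterwards, a chatbot should handle throttling gracefully rather than failing outright. The error quoted in my ticket shows that the SDK had already retried four times before giving up. The Python sketch below wraps a call in capped exponential backoff with full jitter. This is a common general-purpose technique, not anything Bedrock-specific. The sketch detects throttling through the `ThrottlingException` error code that botocore’s `ClientError` exposes in `exc.response`. The attempt count and delays are illustrative assumptions. Backoff does not raise a quota: at 1 request per minute it can get a single request through, but it cannot make that quota serve a whole team.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def is_throttling(exc: BaseException) -> bool:
    """True if exc looks like a botocore ClientError with code ThrottlingException."""
    response = getattr(exc, "response", None)
    if not isinstance(response, dict):
        return False
    return response.get("Error", {}).get("Code") == "ThrottlingException"


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 6,
    base_delay: float = 2.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn; on throttling, wait a random time up to a capped exponential delay."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception as exc:
            # Re-raise anything that is not throttling, and give up on the last attempt.
            if not is_throttling(exc) or attempt == max_attempts - 1:
                raise
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))  # "full jitter" spreads out competing retries
    raise AssertionError("unreachable")


# Usage with the Bedrock Converse API (needs AWS credentials and model access):
#   import boto3
#   runtime = boto3.client("bedrock-runtime", region_name="eu-central-1")
#   reply = call_with_backoff(lambda: runtime.converse(
#       modelId="anthropic.claude-3-5-sonnet-20240620-v1:0",
#       messages=[{"role": "user", "content": [{"text": "Hello"}]}],
#   ))
```

The `sleep` parameter is injectable so that the retry logic can be tested without actually waiting.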

My conclusion & recommendations

Amazon Bedrock’s recent quota cuts have caused significant challenges, especially in high-demand regions like Frankfurt. These changes have disrupted demos, proof-of-concepts, and internal projects for AWS partners and distributors, leading to frustration. Given that Generative AI is an emerging field, this issue deserved more attention from AWS. In my opinion, better communication from AWS upfront could have helped reduce this frustration.

My recommendation to AWS users

Even today, all new AWS accounts and existing AWS accounts with an inactive Amazon Bedrock service have lower service quotas!

Read this article to understand what you can still do to get what you need - without switching to expensive provisioned throughput mode for non-production use cases.

I see this every day in my job: emails sent from AWS to the root user’s email address do not reach the right people. Often they go to functional mailboxes that only a few people can access and that no one monitors. **Establish internal processes that ensure users and developers are informed**, especially if **action required** is in the subject line or the message seems highly relevant!

I also highly recommend setting an operations contact for each AWS account. If set, the operations contact was also copied on this email, so you can enter your technical teams there. In larger companies where this contact is still “just” an admin, that person can then inform the affected teams.
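If you manage many accounts, you can script the operations contact. The Python sketch below uses the AWS Account Management API (`put_alternate_contact` on boto3’s `account` client). The contact details in the usage comment are hypothetical placeholders. Passing `AccountId` for a member account assumes the call comes from the organization’s management account (or a delegated administrator), with trusted access for AWS Account Management enabled in AWS Organizations.

```python
from typing import Any, Dict, Optional


def set_operations_contact(
    client: Any,
    name: str,
    email: str,
    phone: str,
    title: str,
    account_id: Optional[str] = None,
) -> Dict[str, str]:
    """Register an OPERATIONS alternate contact via the AWS Account Management API.

    `client` is expected to be boto3.client("account"); any object with a
    compatible put_alternate_contact method works, which keeps this testable.
    """
    params = {
        "AlternateContactType": "OPERATIONS",
        "Name": name,
        "EmailAddress": email,  # ideally a monitored team mailbox, not one person
        "PhoneNumber": phone,
        "Title": title,
    }
    if account_id is not None:
        # Only needed when setting the contact for a member account from the
        # management (or delegated admin) account of an AWS Organization.
        params["AccountId"] = account_id
    client.put_alternate_contact(**params)
    return params


# Usage (needs AWS credentials; all contact details are hypothetical):
#   import boto3
#   set_operations_contact(boto3.client("account"), "Cloud Operations Team",
#                          "cloud-ops@example.com", "+49 000 000000",
#                          "Operations", account_id="123456789012")
```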

My recommendations to AWS

While these adjustments may have been driven by capacity constraints, the way they were introduced and communicated leaves room for improvement in my view. I have thought about this and think it can be narrowed down to the following points:

- Clear communication: Future changes should be announced via an official AWS blog post, accompanied by detailed emails. AWS also overlooked the impact on AWS partners who handle demos but rely on customer-owned accounts. Provide a clear timeline for implementation. An official blog post, such as this one or this one, is recommended to ensure that all users and AWS partners are well informed and prepared.

- Time to implementation: As mentioned in the first point, a clear timeline is essential. A four-month gap between announcement and implementation is disruptive: people assume the change has already been implemented and forget the original email. A second official email shortly before the change takes effect would help here.

- Transparency: Provide more transparency on how quota reductions are calculated, including details that allow individual users to estimate their target quotas. No one anticipated a 20-fold cut in requests per minute, let alone a 100-fold cut in tokens per minute!

- Harmonised quota adjustment process: It’s unclear why some quotas can’t be adjusted through the standard process and instead require a support ticket. This lack of transparency is confusing. If possible, AWS should allow quota adjustments through the standard Service Quotas interface. If a support ticket is required, the approval process and requirements should be clearly explained. Additionally, if a quota is marked as not adjustable, it should actually not be adjustable. Currently, it feels like a guessing game to determine which quotas can be extended after approval by the AWS service team.

- Less drastic reductions: Cuts of 95% or more, even for existing accounts actively using Amazon Bedrock, are very disruptive. Consider less drastic changes, or limit them to new AWS accounts or to accounts that have not yet enabled Amazon Bedrock or the affected foundation model (FM).

- Support for AWS partners: Recognize the critical role of AWS Partners and Distributors, and consider the impact on them when implementing such changes. Advance notice could also be given in the regular AWS Partner Network (APN) newsletter or in an AWS PartnerCast. (A single email to the root user of the AWS account is NOT sufficient.)

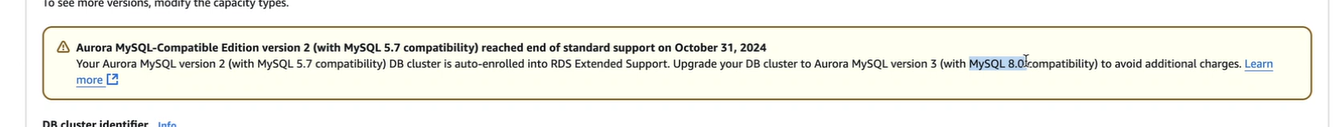

- Pop-up in the AWS-Console: Sure, it’s a little annoying for day-to-day use, but these important and disruptive changes can also be announced directly in the Amazon Bedrock console, as shown here:

This ensures that developers — the affected audience — receive the information. And they need it!

By addressing these areas, AWS can improve the experience for its users and partners, rebuild trust, and better support its growing community.